Part 4. Proxmox to go

The one drawback of a Proxmox cluster is that it's fairly stationary. Proxmox VMs can't really be moved to a laptop running a desktop OS, used on the road, and then put back on the larger hosts. I also can't carry the large Lenovo desktops I use as Proxmox hosts in my backpack. To alleviate this, I recently bought an Intel NUC. It's great: a quad-core i7, 32 GB of RAM, and 1 TB of fairly fast SSD in a box that fits in any bag or backpack. In terms of price per performance, it's more cost-effective to buy a fairly low-spec laptop for around 1k and spend another 1k on a high-spec NUC than to buy a single laptop with matching performance.

My main use cases (that I can currently think of) for the NUC are:

- hide it as a network implant somewhere safe to do what implants do, like reconnoiter

- when teaching in a workshop, host vulnerable targets, or provide attack machines to students over wifi

- take any part of my running servers with me. For example, the VM I play hackthebox with.

Hardware setup

Initially I configured Proxmox on the NUC the way I configured all the other nodes in my lab cluster. For portable use, though, there are a few challenges:

- to access the NUC, or any of the guests it's running, my terminal (= my laptop) needs network access to the NUC

- the guest VMs need to access any outside network(s). I'm assuming wired ethernet exists. Depending on the case, they either want “direct” (bridged) access, or behind a NAT and firewall.

- the guest VMs should also be accessible from my terminal

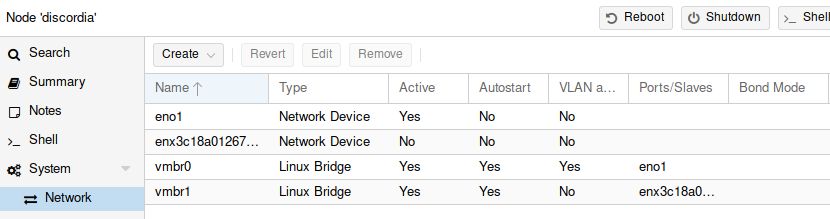

Anticipating this, I had bought a gigabit USB 3.0 ethernet adapter to go with the NUC. I configured the USB NIC as an ethernet bridge in the Proxmox web UI. (Create a new bridge and assign it the physical interface that matches the USB NIC.) It looks like this:

If it's done correctly, /etc/network/interfaces should read like:

auto lo
iface lo inet loopback

iface eno1 inet manual

iface enx3c18a01267eb inet manual

auto vmbr0
iface vmbr0 inet static
        address 192.168.REDACTED.REDACTED
        netmask 24
        gateway 192.168.REDACTED.REDACTED
        bridge-ports eno1
        bridge-stp off
        bridge-fd 0
        bridge-vlan-aware yes
        bridge-vids 2-4094

auto vmbr1
iface vmbr1 inet manual
        bridge-ports enx3c18a01267eb
        bridge-stp off
        bridge-fd 0

Now on the NUC's Proxmox node, vmbr0 is bridged to the onboard ethernet port of the NUC, and vmbr1 is bridged to the USB ethernet adapter. vmbr0 is used by the terminal to connect to the NUC and any guests it's running. vmbr1 is used by the guests running on the NUC to access the outside network.
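To double-check which physical port ended up behind which bridge, something like the following can help. It's only a sketch: list_bridges is a helper name I made up, and it assumes the simple stanza layout shown above, where each bridge-ports line follows the iface line it belongs to.

```shell
#!/bin/bash
# list_bridges [FILE]
# Print "bridge: port [port...]" for every bridge in an ifupdown-style file.
# FILE defaults to /etc/network/interfaces.
list_bridges() {
    local file="${1:-/etc/network/interfaces}"
    awk '
        $1 == "iface" { current = $2 }      # remember which stanza we are in
        $1 == "bridge-ports" {              # collect the ports of that stanza
            ports = ""
            for (i = 2; i <= NF; i++) ports = ports (i > 2 ? " " : "") $i
            print current ": " ports
        }
    ' "$file"
}
```

Running list_bridges on the NUC with the configuration above should list vmbr0 with eno1 and vmbr1 with the USB adapter.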

pfSense for the road

When on the go, we need something on the NUC to act as a DHCP server for the guests and the terminal. I created a VM to run pfSense on the NUC. It is set up so that its LAN address space is the same as in the home lab; remember that Proxmox can't hop to another IP address after it's installed and set up. I also enabled the DNS resolver so that my terminal can still reach the guests by name rather than by IP address. Also, this pfSense doesn't hand out a default gateway to its DHCP clients: the guests need two IPs, one to be reached at from the terminal and another for the outside network. If I'd rather have the clients access the outside through NAT, I just reconfigure pfSense to also hand out its own address as the default gateway to the DHCP clients.

Magic

One challenge of this setup is that when the NUC boots up, it should check whether it's in my home lab environment. If it is, it doesn't need to start the pfSense guest. If it is elsewhere, it should start the pfSense guest so that the terminal and guests can get their internal IPs and, if needed, routing. To do this, I created yet another kludge.

I added a script on the NUC that checks whether my home lab router is pingable; if it isn't, it starts the pfSense guest. The script reads:

root@discordia:~# cat startrouter.sh
#!/bin/bash
PATH=$PATH:/usr/sbin
sleep 15
if ping -q -c 4 192.168.9.1 ; then
    echo pingable
else
    pvecm e 1
    qm set 900 --net0 bridge=vmbr0,model=virtio,firewall=1
    qm set 900 --net1 bridge=vmbr1,model=virtio,firewall=1
    qm start 900
fi

First it sleeps 15 seconds to wait for its network to actually be up. If the home lab router is pingable, the script exits. Otherwise it starts the pfSense guest (whose ID is 900). One more kludge is reassigning the network interfaces on the pfSense VM. This is needed because Proxmox seems to keep guest NICs assigned to valid bridges only, and in my home lab I run the NUC with the USB ethernet adapter disconnected. After a boot without the USB NIC present, the guests' second NICs either get reassigned to the onboard NIC or just disappear from the guests.

Also note the pvecm e 1 above (shorthand for pvecm expected 1). It sets the number of expected votes in the Proxmox cluster to 1, so the NUC is fine with being the only reachable node in the cluster. Without that, it refuses to start VMs, as it can't reach the other cluster members and has no quorum.
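The fixed 15 second sleep is a guess at how long the network takes to come up. A possible alternative is to retry the ping a few times, as sketched below. wait_for_host and the PROBE variable are names I made up for this sketch, and the retry count and delay are arbitrary defaults. Note the trade-off: at home the function returns as soon as the router answers, but away from home it only gives up after all attempts have failed.

```shell
#!/bin/bash
# PROBE is a hypothetical hook: the command used to test reachability.
# By default it sends one ping and waits at most 2 seconds for a reply.
PROBE="${PROBE:-ping -q -c 1 -W 2}"

# wait_for_host HOST [TRIES] [DELAY]
# Returns 0 as soon as one probe succeeds, 1 if all TRIES probes fail.
wait_for_host() {
    local host="$1" tries="${2:-10}" delay="${3:-3}" i
    for ((i = 1; i <= tries; i++)); do
        if $PROBE "$host" >/dev/null 2>&1; then
            return 0
        fi
        # pause between attempts, but not after the last one
        if ((i < tries)); then
            sleep "$delay"
        fi
    done
    return 1
}
```

In startrouter.sh, the sleep and the ping test could then be replaced with a single test along the lines of: if wait_for_host 192.168.9.1 ; then echo pingable ; else ... fi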

The script above is run after a boot by placing this in the crontab (crontab -e):

@reboot /root/startrouter.sh
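If the crontab entry should be installed from a script rather than by hand, a small filter like this one keeps it from being added twice. This is only a sketch and add_cron_line is a made-up helper name: it reads an existing crontab on standard input and prints it back with the given line appended if it isn't there yet.

```shell
#!/bin/bash
# add_cron_line LINE
# Read a crontab on stdin and print it, appending LINE unless an identical
# line is already present.
add_cron_line() {
    local line="$1" current
    current="$(cat)"
    if printf '%s\n' "$current" | grep -qxF -- "$line"; then
        printf '%s\n' "$current"                 # already there, print unchanged
    elif [ -n "$current" ]; then
        printf '%s\n%s\n' "$current" "$line"     # append to the existing entries
    else
        printf '%s\n' "$line"                    # crontab was empty
    fi
}
```

It could be used as: crontab -l 2>/dev/null | add_cron_line '@reboot /root/startrouter.sh' | crontab -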

Disable automatic upgrades

Note that in the cloud.cfg file, in the cloud_final_modules section, there's a line that reads

- package-update-upgrade-install

This makes the guest upgrade all of its packages. That's fine when the NUC is in my home lab, but somewhere else with limited or no internet, it prevents me from instantiating new VMs, as the upgrade hangs or takes too long with no bandwidth. To alleviate this, I created another template that has this line removed from the cloud.cfg file so the guest doesn't upgrade itself. (To do this, I created a full clone of the original template, edited this file on the clone, then converted the clone into a template again. I named these kali-template and kali-template-noupdate.)
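The edit on the clone can also be scripted. The sketch below removes the list entry and keeps a backup next to the file. strip_upgrade_module is a made-up name, the path is passed in by the caller (on most images cloud.cfg lives in /etc/cloud/, but that is an assumption here), and the pattern also accepts underscores in the module name because I believe newer cloud-init releases spell it that way.

```shell
#!/bin/bash
# strip_upgrade_module FILE
# Remove the package-update-upgrade-install list entry from a cloud.cfg file.
# The original is kept as FILE.bak.
strip_upgrade_module() {
    local cfg="$1"
    cp "$cfg" "$cfg.bak" || return 1
    # delete only the matching list item, whatever its indentation
    sed '/^[[:space:]]*-[[:space:]]*package[-_]update[-_]upgrade[-_]install[[:space:]]*$/d' \
        "$cfg.bak" > "$cfg"
}
```

For example, inside the cloned guest: strip_upgrade_module /etc/cloud/cloud.cfg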

Some scripts

This script makes sure a guest only has one NIC:

#!/bin/bash
# Remove the second NIC (net1) from the given guest.
vmid=$1
if [ -z "$vmid" ] ; then
    echo "usage: $0 vmid" >&2
    exit 1
fi
pvesh set "/nodes/discordia/qemu/$vmid/config" --delete net1

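The script above uses pvesh to remove the second network interface from the VM. I went with pvesh because I didn't find a way to remove an interface with the qm command, only to add and reconfigure them; qm set does accept a --delete option, though, so qm set $vmid --delete net1 should work as well. Above, replace discordia with the name of your node.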
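If a guest might have more than two NICs, the same idea can be generalized by reading the guest's configuration first. This is a sketch under the assumption that qm config prints one "netN: ..." line per interface; extra_nics and strip_extra_nics are made-up names, and discordia is again just my node name.

```shell
#!/bin/bash
# extra_nics
# Read `qm config VMID` output on stdin and print every netN key except net0.
extra_nics() {
    awk -F: '/^net[0-9]+:/ && $1 != "net0" { print $1 }'
}

# strip_extra_nics VMID [NODE]
# Delete every NIC except net0 from the guest. NODE defaults to discordia.
strip_extra_nics() {
    local vmid="$1" node="${2:-discordia}" nic
    for nic in $(qm config "$vmid" | extra_nics); do
        pvesh set "/nodes/$node/qemu/$vmid/config" --delete "$nic"
    done
}
```

Calling strip_extra_nics 123 would then leave guest 123 with only net0.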

The following script makes sure a guest has two NICs, and that the second NIC is attached to the USB NIC bridge on the NUC:

#!/bin/bash
# Attach a second NIC (net1) on the USB NIC bridge to the given guest.
vmid=$1
if [ -z "$vmid" ] ; then
    echo "usage: $0 vmid" >&2
    exit 1
fi
qm set "$vmid" --net1 bridge=vmbr1,model=virtio,firewall=1

I'm calling these set1inet.sh and set2inet.sh. Before starting guests on my NUC, I call one or the other, depending on whether I'm at the home lab or elsewhere, to make sure the guests have their NICs configured correctly.
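Picking between the two could be automated as well. The sketch below assumes that the pfSense guest (ID 900) only runs when the NUC is away from the home lab, as arranged by startrouter.sh, that guests need the second NIC only when away, and that qm status prints a line such as "status: running". pick_nic_script is a made-up name.

```shell
#!/bin/bash
# pick_nic_script
# Read `qm status 900` output on stdin and print the helper to run:
# set2inet.sh when pfSense is running (away), set1inet.sh otherwise (home).
pick_nic_script() {
    if grep -q '^status: running'; then
        echo set2inet.sh
    else
        echo set1inet.sh
    fi
}
```

Used as: script=$(qm status 900 | pick_nic_script) ; ./"$script" "$vmid" (assuming both helpers live in the current directory).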